Video Streaming Web Server

by mjrovai in Circuits > Raspberry Pi



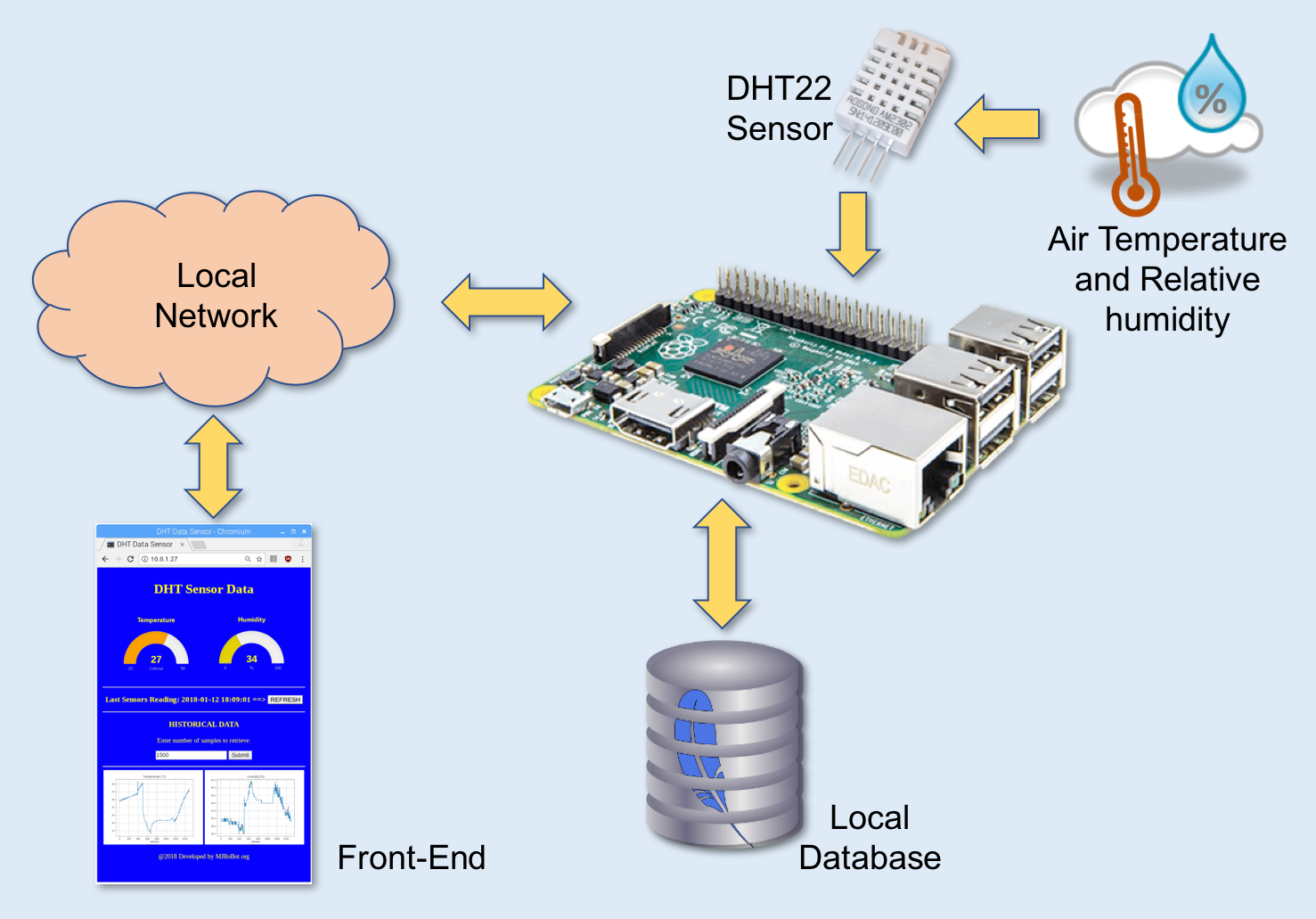

In my last tutorial, FROM DATA TO GRAPH. A WEB JOURNEY WITH FLASK AND SQLITE, we learned how to:

- Capture real data (air temperature and relative humidity) using a DHT22 sensor;

- Load the data into a local database built with SQLite;

- Create graphics with historical data using Matplotlib;

- Display data with animated "gauges" created with JustGage;

- Make everything available online through a local web server created with Python and Flask.

Now we will learn how to:

- Stream video;

- Integrate the video into a web server.

BoM - Bill of Materials

- Raspberry Pi 3 - US$ 32.00

- Raspberry Pi NoIR (night) camera (*) - US$ 10.00

- DHT22 Temperature and Relative Humidity Sensor (**) - US$ 9.95

- 4K7 ohm (4.7 kΩ) resistor

(*) I used a night camera in this project, but a normal PiCam can be used as well. A USB camera can also work, but it needs a different camera module, because camera_pi.py relies on the picamera library, which only supports the Raspberry Pi camera modules.

(**) The DHT22 is optional. I will use it to show how to integrate the video stream into a web page that also shows historical sensor data.

Installing the Camera

1. With your RPi turned off, install the camera in its dedicated (CSI) port as shown below:

2. Turn on your Pi, open the Raspberry Pi Configuration tool from the main menu and verify that the Camera interface is enabled:

If you had to enable it, press [OK] and reboot your Pi.

Make a simple test to verify that everything is OK:

raspistill -o ~/Desktop/image.jpg

An image icon should appear on your RPi desktop. Double-click it to open it. If the image appears, your Pi is ready to stream video! If you want to know more about the camera, visit: Getting started with picamera.

Installing Flask

There are several ways to stream video. The best (and "lightest") way I found was with Flask, as developed by Miguel Grinberg. For a detailed explanation of how Flask does this, please see his great tutorial: flask-video-streaming-revisited.

In my tutorial Python WebServer With Flask and Raspberry Pi, we learned in more detail how Flask works and how to implement a web server that captures data from sensors and shows their status on a web page. In the first part of this tutorial we will do the same, except that the data sent to our front end will be a video stream.

Creating a web-server environment:

The first thing to do is to install Flask on your Raspberry Pi. If you do not have it, go to the Terminal and enter:

sudo apt-get install python3-flask

When starting a new project, it is best to create a folder to keep your files organized. For example:

From home, go to your working directory:

cd Documents

Create a new folder, for example:

mkdir camWebServer

The above command creates a folder named "camWebServer", where we will save our Python scripts:

/home/pi/Documents/camWebServer

Now, inside this folder, let's create two sub-folders: static, for CSS and eventually JavaScript files, and templates, for HTML files. Go to your newly created folder:

cd camWebServer

And create the 2 new sub-folders:

mkdir static

mkdir templates

The final directory "tree" will look like:

```
└── Documents
    └── camWebServer
        ├── templates
        └── static
```

OK! With our environment in place, let's create our Python web server application to stream video.

Creating the Video Streaming Server

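First, download Miguel Grinberg's camera module, camera_pi.py, and save it in the camWebServer directory you just created. This is the heart of our project; Miguel did a fantastic job! Note that camera_pi.py uses the picamera library (install it with sudo apt-get install python3-picamera if it is not already present), and if your copy of camera_pi.py imports base_camera, download base_camera.py from the same repository as well.

Now, using Flask, let's adapt Miguel's original web server application (app.py) into a Python script dedicated to rendering our video. We will call it appCam.py: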

```python
from flask import Flask, render_template, Response

# Raspberry Pi camera module (camera_pi.py by Miguel Grinberg; requires the picamera package)
from camera_pi import Camera

app = Flask(__name__)


@app.route('/')
def index():
    """Video streaming home page."""
    return render_template('index.html')


def gen(camera):
    """Video streaming generator function."""
    while True:
        frame = camera.get_frame()
        # Each JPEG is sent as one part of a multipart response, delimited by --frame
        yield (b'--frame\r\n'
               b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n')


@app.route('/video_feed')
def video_feed():
    """Video streaming route. Put this in the src attribute of an img tag."""
    return Response(gen(Camera()),
                    mimetype='multipart/x-mixed-replace; boundary=frame')


if __name__ == '__main__':
    # Listen on all interfaces; port 80 is privileged, so run the script with sudo
    app.run(host='0.0.0.0', port=80, debug=True, threaded=True)
```

The above script streams your camera video to an index.html page (saved in the templates folder) like the one below:

```html
<html>
<head>
    <title>MJRoBot Lab Live Streaming</title>
    <link rel="stylesheet" href='../static/style.css'/>
</head>
<body>
    <h1>MJRoBot Lab Live Streaming</h1>
    <h3><img src="{{ url_for('video_feed') }}" width="90%"></h3>
    <hr>
    <p> @2018 Developed by MJRoBot.org</p>
</body>
</html>
```

The most important line of index.html is:

`<img src="{{ url_for('video_feed') }}" width="90%">`

This is where the video is fed into our web page.

You must also include the style.css file in the static directory to get the styling shown above.

All the files can be downloaded from my GitHub: camWebServer
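Under the hood, the /video_feed response is an MJPEG stream: each chunk yielded by gen() is one JPEG preceded by the --frame boundary, and the browser replaces the image every time a new part arrives. The sketch below reproduces that framing without a Pi camera, which can be handy for testing the generator on another machine. FakeCamera and split_frames are hypothetical names for illustration, not part of camera_pi.py, and the naive split assumes the boundary never appears inside a JPEG.

```python
import itertools


class FakeCamera:
    """Hypothetical stand-in for camera_pi.Camera: cycles through fixed byte strings."""

    def __init__(self, frames):
        self._frames = itertools.cycle(frames)

    def get_frame(self):
        return next(self._frames)


def gen(camera):
    """Same framing as gen() in appCam.py: boundary line, part header, blank line, JPEG."""
    while True:
        frame = camera.get_frame()
        yield (b'--frame\r\n'
               b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n')


def split_frames(stream_bytes, boundary=b'--frame'):
    """Recover each part's payload from a multipart/x-mixed-replace body (naive split)."""
    frames = []
    for part in stream_bytes.split(boundary + b'\r\n'):
        if not part:
            continue  # the text before the first boundary is empty
        # Each part is: headers, blank line, payload, trailing CRLF
        _headers, _, payload = part.partition(b'\r\n\r\n')
        frames.append(payload[:-2] if payload.endswith(b'\r\n') else payload)
    return frames


if __name__ == '__main__':
    camera = FakeCamera([b'JPEG-1', b'JPEG-2'])
    body = b''.join(itertools.islice(gen(camera), 3))
    print(split_frames(body))  # [b'JPEG-1', b'JPEG-2', b'JPEG-1']
```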

Just to be sure that everything is in the right place, let's check our environment after all the updates:

```
└── Documents
    └── camWebServer
        ├── camera_pi.py
        ├── appCam.py
        ├── templates
        │   └── index.html
        └── static
            └── style.css
```
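If the page does not load, a quick way to confirm this layout is a small script run on the Pi. This is a sketch using pathlib; the EXPECTED list mirrors the tree above, and missing_files() is a hypothetical helper name, not part of the project files.

```python
from pathlib import Path

# Files appCam.py relies on, relative to the project folder (from the tree above)
EXPECTED = [
    'camera_pi.py',
    'appCam.py',
    'templates/index.html',
    'static/style.css',
]


def missing_files(project_dir, expected=EXPECTED):
    """Return the expected paths that are not regular files under project_dir."""
    root = Path(project_dir)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == '__main__':
    missing = missing_files(Path.home() / 'Documents' / 'camWebServer')
    print('All files in place.' if not missing else 'Missing: ' + ', '.join(missing))
```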

Now, run the Python script from the Terminal (sudo is needed because the server listens on port 80, a privileged port):

sudo python3 appCam.py

Open a browser on any device in your network and enter http://YOUR_RPI_IP (in my case, for example, http://10.0.1.27).

NOTE: If you are not sure of your RPi's IP address, run this in your terminal:

ifconfig

You will find it in the wlan0: section (or in eth0: if the Pi uses a wired connection).
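The same address can also be printed from Python. This sketch uses a common trick: "connecting" a UDP socket sends no packets, but it makes the operating system choose the outgoing interface, whose address getsockname() then reports. The probe address 8.8.8.8 is an arbitrary routable address chosen for illustration, and local_ip() is a hypothetical helper that falls back to 127.0.0.1 when there is no route.

```python
import socket


def local_ip(probe_host='8.8.8.8', probe_port=80):
    """Return the IPv4 address of the interface used for outgoing traffic.

    Connecting a UDP socket only asks the OS to pick a route; no packet is sent.
    Falls back to the loopback address when no route exists (e.g. offline).
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        return '127.0.0.1'
    finally:
        sock.close()


if __name__ == '__main__':
    print('Open http://{}/ in your browser'.format(local_ip()))
```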

The results:

That's it! From here, it is only a matter of making the page more sophisticated, embedding your video in another page, and so on.

Installing the Temperature & Humidity Sensor

Now it is time to see how to call a video stream from a web page. Let's create a page that uses real data such as air temperature and relative humidity. For that, we will use the good old DHT22. The Adafruit site provides great information about these sensors. Below is some information retrieved from there:

Overview

The low-cost DHT temperature & humidity sensors are very basic and slow but are great for hobbyists who want to do some basic data logging. The DHT sensors are made of two parts: a capacitive humidity sensor and a thermistor. There is also a very basic chip inside that does some analog-to-digital conversion and spits out a digital signal with the temperature and humidity. The digital signal is fairly easy to read using any microcontroller.

DHT22 Main characteristics:

- Low cost

- 3 to 5V power and I/O

- 2.5mA max current use during conversion (while requesting data)

- Good for 0-100% humidity readings with 2-5% accuracy

- Good for -40 to 125°C temperature readings ±0.5°C accuracy

- No more than 0.5 Hz sampling rate (once every 2 seconds)

- Body size 15.1mm x 25mm x 7.7mm

- 4 pins with 0.1" spacing
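Because the DHT22 should not be sampled more than once every 2 seconds, a page that is refreshed quickly (or opened by several browsers at once) could request data faster than the sensor allows. One way to respect that limit is to cache the last reading. The sketch below is an assumption, not part of the original project: CachedSensor is a hypothetical wrapper, and read_fn stands for any function returning (humidity, temperature).

```python
import time


class CachedSensor:
    """Hypothetical wrapper that reuses a reading for at least min_interval seconds."""

    def __init__(self, read_fn, min_interval=2.0, clock=time.monotonic):
        self._read_fn = read_fn            # returns (humidity, temperature)
        self._min_interval = min_interval  # DHT22: at most one sample every 2 s
        self._clock = clock                # injectable so it can be tested
        self._last_time = None
        self._last_value = None

    def read(self):
        now = self._clock()
        if self._last_time is None or now - self._last_time >= self._min_interval:
            self._last_value = self._read_fn()
            self._last_time = now
        return self._last_value
```

On the Pi it could wrap the library call, for example CachedSensor(lambda: Adafruit_DHT.read_retry(Adafruit_DHT.DHT22, 16)); keep in mind that read_retry itself may block for several seconds while it retries failed reads.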

Since you will usually use the sensor over distances of less than 20 m, a 4K7 ohm pull-up resistor should be connected between the Data and VCC pins. The DHT22 data pin will be connected to Raspberry Pi GPIO 16 (BCM numbering). Check the electrical diagram above and connect the sensor to the RPi pins as follows:

- Pin 1 - Vcc ==> 3.3V

- Pin 2 - Data ==> GPIO 16

- Pin 3 - Not connected

- Pin 4 - Gnd ==> Gnd

Do not forget to install the 4K7 ohm resistor between the Vcc and Data pins.
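For a sense of what the 4K7 pull-up costs: while the data line is pulled low during communication, current flows from the 3.3V supply through the resistor to ground, and Ohm's law gives the worked value below. When the line idles high, essentially no current flows through the resistor.

```latex
I = \frac{V_{CC}}{R} = \frac{3.3\,\mathrm{V}}{4700\,\Omega} \approx 0.70\,\mathrm{mA}
```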

Once the sensor is connected, we must also install its library on our RPi.

Installing DHT Library:

On your Raspberry Pi, starting from your home directory (/home/pi), go to Documents:

cd Documents

Create a directory to install the library and move into it:

mkdir DHT22_Sensor

cd DHT22_Sensor

On your browser, go to Adafruit GitHub:

https://github.com/adafruit/Adafruit_Python_DHT

Download the library by clicking the download zip link on the right and unzip the archive into the folder you just created on your Raspberry Pi. Then go to the library directory (the subfolder created automatically when you unzipped the file) and run the command:

sudo python3 setup.py install

Get the test program (DHT22_test.py) from my GitHub:

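```python
import Adafruit_DHT

# Sensor type and the GPIO (BCM numbering) wired to the DHT22 data pin
DHT22Sensor = Adafruit_DHT.DHT22
DHTpin = 16

# read_retry() keeps trying until it gets a valid reading or gives up (None, None)
humidity, temperature = Adafruit_DHT.read_retry(DHT22Sensor, DHTpin)

if humidity is not None and temperature is not None:
    print('Temp={0:0.1f}*C Humidity={1:0.1f}%'.format(temperature, humidity))
else:
    print('Failed to get reading. Try again!')
```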

Execute the program with the command:

python3 DHT22_test.py

The Terminal screenshot below shows the result.
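The test program only checks for None. As an extra safeguard (an assumption, not something the original test does), a reading can also be checked against the DHT22's rated ranges listed earlier: 0 to 100% humidity and -40 to 125°C. format_reading() is a hypothetical helper whose output mirrors DHT22_test.py.

```python
def format_reading(humidity, temperature):
    """Return a display string like DHT22_test.py, rejecting impossible values.

    Limits are the DHT22 rated ranges: 0-100 %RH and -40 to 125 degrees C.
    """
    if humidity is None or temperature is None:
        return 'Failed to get reading. Try again!'
    if not (0.0 <= humidity <= 100.0) or not (-40.0 <= temperature <= 125.0):
        return 'Out-of-range reading ignored. Try again!'
    return 'Temp={0:0.1f}*C Humidity={1:0.1f}%'.format(temperature, humidity)


if __name__ == '__main__':
    print(format_reading(45.2, 23.4))  # Temp=23.4*C Humidity=45.2%
```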

Creating a Web Server Application for Data and Video Display

Let's create another Python web server using Flask that will handle both the data captured by the sensor and the video stream.

As our code becomes more complex, I suggest using Geany as the IDE, since it lets you work simultaneously with different file types (.py, .html and .css).

The code below is the python script to be used on our web-server:

```python
import time

from flask import Flask, render_template, Response

# Raspberry Pi camera module (camera_pi.py; requires the picamera package)
from camera_pi import Camera
import Adafruit_DHT

app = Flask(__name__)


def getDHTdata():
    """Read the DHT22 on GPIO 16; values stay None if the read fails."""
    DHT22Sensor = Adafruit_DHT.DHT22
    DHTpin = 16
    hum, temp = Adafruit_DHT.read_retry(DHT22Sensor, DHTpin)
    if hum is not None and temp is not None:
        hum = round(hum)
        temp = round(temp, 1)
    return temp, hum


@app.route("/")
def index():
    """Main page: current time plus the latest sensor reading."""
    timeNow = time.asctime(time.localtime(time.time()))
    temp, hum = getDHTdata()
    templateData = {
        'time': timeNow,
        'temp': temp,
        'hum': hum
    }
    return render_template('index.html', **templateData)


@app.route('/camera')
def cam():
    """Video streaming home page."""
    timeNow = time.asctime(time.localtime(time.time()))
    templateData = {
        'time': timeNow
    }
    return render_template('camera.html', **templateData)


def gen(camera):
    """Video streaming generator function."""
    while True:
        frame = camera.get_frame()
        yield (b'--frame\r\n'
               b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n')


@app.route('/video_feed')
def video_feed():
    """Video streaming route. Put this in the src attribute of an img tag."""
    return Response(gen(Camera()),
                    mimetype='multipart/x-mixed-replace; boundary=frame')


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=80, debug=True, threaded=True)
```

You can get the Python script appCam2.py from my GitHub.

What the code above does:

- Every time someone requests "/", the main page (index.html) of our website, a GET request is generated;

- On this request, the code first retrieves the current time from the system;

- Next, it reads data from the sensor using the function getDHTdata();

- With the data in hand, the script returns time, temp and hum to the web page (index.html) as the response to the request.

Also, the "/camera" page can be requested when the user wants to see the video stream. In this case, camera.html is rendered, and its img tag calls the /video_feed route, which streams the frames produced by gen(), just as in the previous step.
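One detail worth noting: if the sensor read fails, getDHTdata() returns None values and the page would literally show "None". A minimal sketch of building the template data with a placeholder instead, assuming a hypothetical build_template_data() helper that takes the reader function as a parameter so it can be tested without the sensor:

```python
import time


def build_template_data(read_fn, placeholder='--'):
    """Hypothetical helper producing the dict that index() passes to render_template.

    read_fn returns (temp, hum) like getDHTdata(); None values are replaced
    by a placeholder so the page never displays "None".
    """
    time_now = time.asctime(time.localtime(time.time()))
    temp, hum = read_fn()
    return {
        'time': time_now,
        'temp': placeholder if temp is None else round(temp, 1),
        'hum': placeholder if hum is None else round(hum),
    }
```

In appCam2.py, index() could then end with return render_template('index.html', **build_template_data(getDHTdata)).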

So, let's see the index.html, camera.html and style.css files that will be used to build our front-end:

index.html

```html
<!doctype html>
<html>
<head>
    <title>MJRoBot Lab Sensor Data</title>
    <link rel="stylesheet" href='../static/style.css'/>
</head>
<body>
    <h1>MJRoBot Lab Sensor Data</h1>
    <h3> TEMPERATURE ==> {{ temp }} &deg;C</h3>
    <h3> HUMIDITY (Rel.) ==> {{ hum }} %</h3>
    <hr>
    <h3> Last Sensors Reading: {{ time }} ==> <a href="/" class="button">REFRESH</a></h3>
    <hr>
    <h3> MJRoBot Lab Live Streaming ==> <a href="/camera" class="button">LIVE</a></h3>
    <hr>
    <p> @2018 Developed by MJRoBot.org</p>
</body>
</html>
```

You can get the file index.html from my GitHub.

camera.html

Basically, this page is the same as the one we created before, except that it also shows the time and adds a button to return to the main page.

```html
<html>
<head>
    <title>MJRoBot Lab Live Streaming</title>
    <link rel="stylesheet" href='../static/style.css'/>
    <style>
        body {
            text-align: center;
        }
    </style>
</head>
<body>
    <h1>MJRoBot Lab Live Streaming</h1>
    <h3><img src="{{ url_for('video_feed') }}" width="80%"></h3>
    <h3>{{ time }}</h3>
    <hr>
    <h3> Return to main page ==> <a href="/" class="button">RETURN</a></h3>
    <hr>
    <p> @2018 Developed by MJRoBot.org</p>
</body>
</html>
```

You can get the file camera.html from my GitHub.

style.css

```css
body {
    background: blue;
    color: yellow;
    padding: 1%;
}

.button {
    font: bold 15px Arial;
    text-decoration: none;
    background-color: #EEEEEE;
    color: #333333;
    padding: 2px 6px 2px 6px;
    border-top: 1px solid #CCCCCC;
    border-right: 1px solid #333333;
    border-bottom: 1px solid #333333;
    border-left: 1px solid #CCCCCC;
}
```

You can get the file style.css from my GitHub.

Creating the Web Server environment

The files must be placed in your directory like this:

```
└── camWebServer2
    ├── camera_pi.py
    ├── appCam2.py
    ├── templates
    │   ├── index.html
    │   └── camera.html
    └── static
        └── style.css
```

Now, run the Python script from the Terminal:

sudo python3 appCam2.py

Adding Video Stream on a Previous Project - OPTIONAL

Optionally, let's include the video stream in the project developed in my previous tutorial: FROM DATA TO GRAPH. A WEB JOURNEY WITH FLASK AND SQLITE.

As a reminder, in that project we logged data (temperature and humidity) in a database, presenting current and historical data on a web page:

We did this using two Python scripts: one to log data in a database (logDHT.py) and another to present the data on a web page via a Flask web server (appDhtWebServer.py):
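For reference, the kind of logging logDHT.py performs can be sketched with Python's built-in sqlite3 module. The table name DHT_data and its columns are assumptions for illustration only; check the actual schema of sensorsData.db from the previous tutorial before reusing this.

```python
import sqlite3


def open_db(path):
    """Open (or create) the database with a hypothetical DHT_data table."""
    conn = sqlite3.connect(path)
    conn.execute('CREATE TABLE IF NOT EXISTS DHT_data '
                 '(timestamp DATETIME, temp REAL, hum REAL)')
    return conn


def log_reading(conn, temp, hum):
    """Insert one reading stamped with SQLite's current local time."""
    with conn:  # commits on success, rolls back on error
        conn.execute("INSERT INTO DHT_data VALUES (datetime('now', 'localtime'), ?, ?)",
                     (temp, hum))


def last_reading(conn):
    """Return (timestamp, temp, hum) of the most recently inserted row, or None."""
    return conn.execute('SELECT timestamp, temp, hum FROM DHT_data '
                        'ORDER BY rowid DESC LIMIT 1').fetchone()
```

On the Pi, conn = open_db('sensorsData.db') followed by log_reading(conn, temp, hum) could run in the same loop that reads the sensor.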

Creating the web-server environment:

Below is my directory layout. Sensors_Database is in the Documents folder on my RPi:

```
└── Sensors_Database
    ├── sensorsData.db
    ├── logDHT.py
    └── dhtWebHistCam
        ├── appDhtWebHistCam.py
        ├── camera_pi.py
        ├── appCam2.py
        ├── templates
        │   ├── index.html
        │   └── camera.html
        └── static
            ├── justgage.js
            ├── raphael-2.1.4.min.js
            └── style.css
```

The files can be found on my GitHub: dhtWebHistCam

Below the result:

Conclusion

As always, I hope this project can help others find their way into the exciting world of electronics!

For details and final code, please visit my GitHub repository: Video-Streaming-with-Flask

For more projects, please visit my blog: MJRoBot.org

Saludos from the south of the world!

See you at my next instructable!

Thank you,

Marcelo